Many engineered systems have become too complex for humans to optimize. Circuit design has long depended on CAD, and more recently, automated methods for software design have also started to emerge. Design of machine learning systems, i.e. deep neural networks, have also reached this level of complexity where humans can no longer optimize effectively. For the last few years, Sentient has been developing methods for neuroevolution, i.e. using evolutionary computation to discover more effective deep learning architectures. This research builds on over 25 years of work at UT Austin on evolving network weights and topologies, and coincides with related efforts e.g. at OpenAI, Uber.ai, DeepMind, and Google Brain.

There are three reasons why neuroevolution in particular is a good approach (compared with other methods such as Bayesian parameter optimization, gradient descent, and reinforcement learning): (1) Neuroevolution is a population-based search method, which makes it possible to explore the space of possible solutions more broadly than other methods: i.e. instead of having to find solutions through incremental improvement, it can take advantage of stepping stones, thus discovering surprising and novel solutions. (2) It can utilize well-tested methods from the Evolutionary Computation field for optimizing graph structures to design innovative deep learning topologies and components. (3) Neuroevolution is massively parallelizable with minimal communication cost between threads, thus making it possible to take advantage of thousands of GPUs.

This section showcases three new neuroevolution papers from Sentient, reporting the most recent results. The main point is that neuroevolution can be harnessed to improve the state of the art in deep learning:

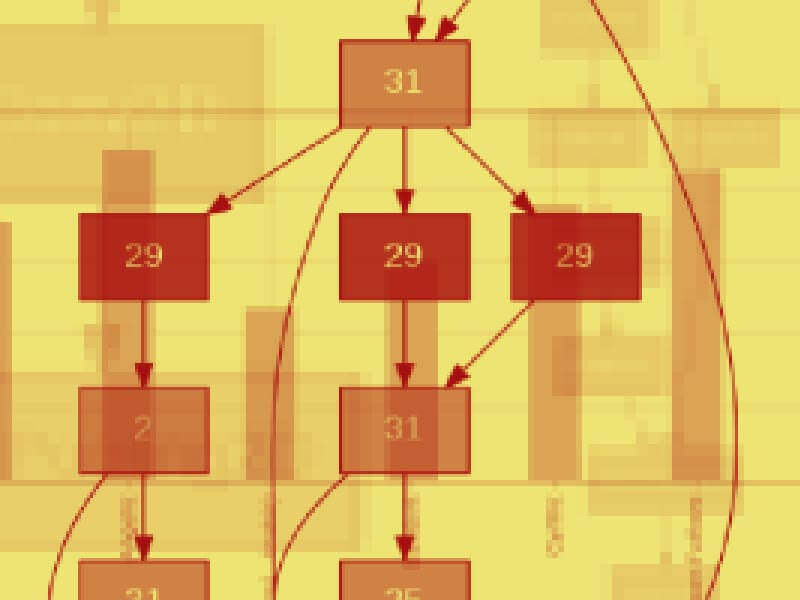

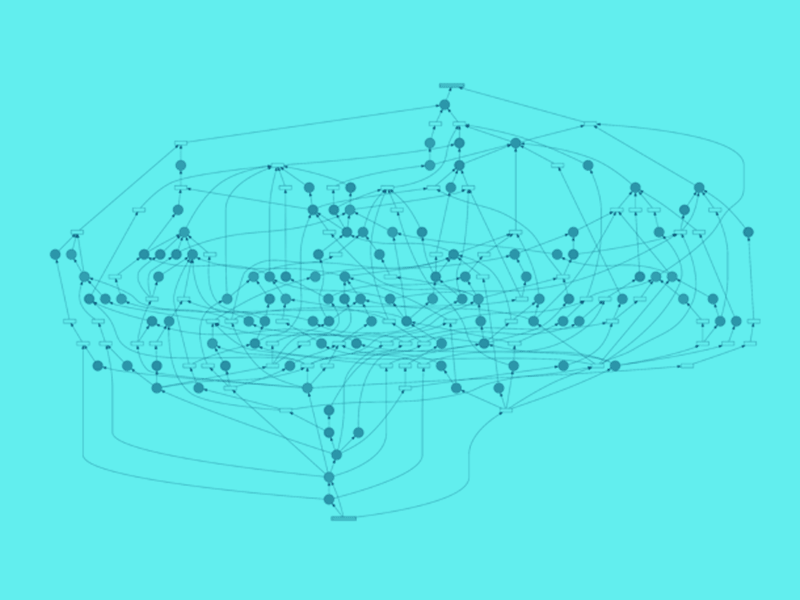

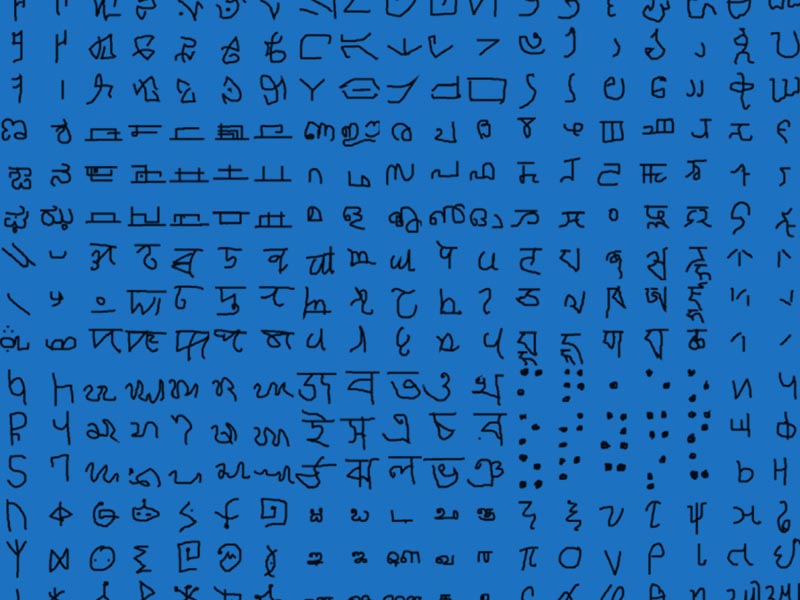

- In the Omniglot multitask character recognition domain, evolution of hyperparameters, modules, and topologies improved error from 32% to 10%. Two new approaches were introduced: Coevolution of a common network topology and components that fill it (the CMSR method; Demo 1.1), and evolution of different topologies for different alphabets with shared modules (the CTR method; Demo 1.2). Their strengths were combined in the CMTR method (Demo 1.3) to achieve the above improvement (Paper 1).

- In the CelebA multitask face attribute recognition domain, state of the art was improved from 8.00% to 7.94%. This result was achieved with a new method, PTA, that extends CTR to multiple output decoder architectures (Paper 2).

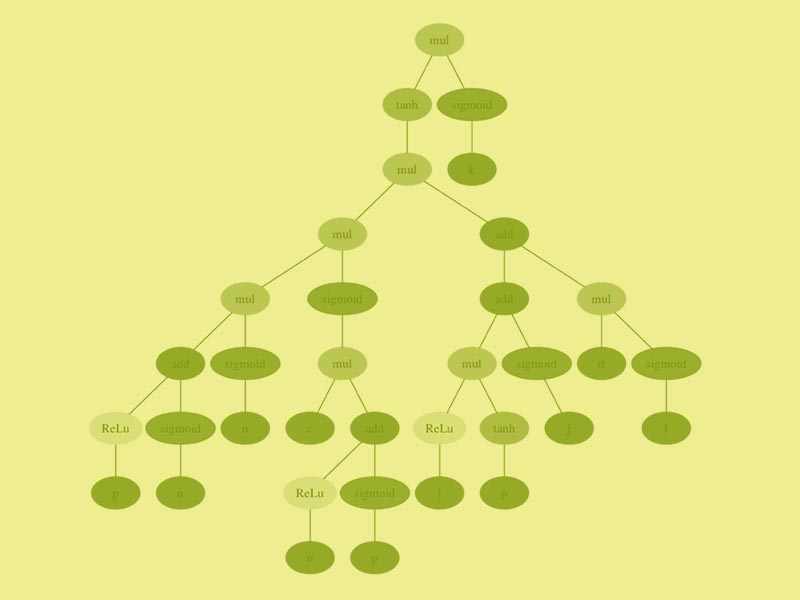

- In the language modeling domain (i.e. predicting the next word in a language corpus), evolution of a gated recurrent node structure improved performance 10.8 perplexity points over the standard LSTM structure—a structure that has been essentially unchanged for over 25 years!. The method is based on tree encoding of the node structure, an archive to encourage exploration, and prediction of performance from partial training (Paper 3; Demo 3.1).

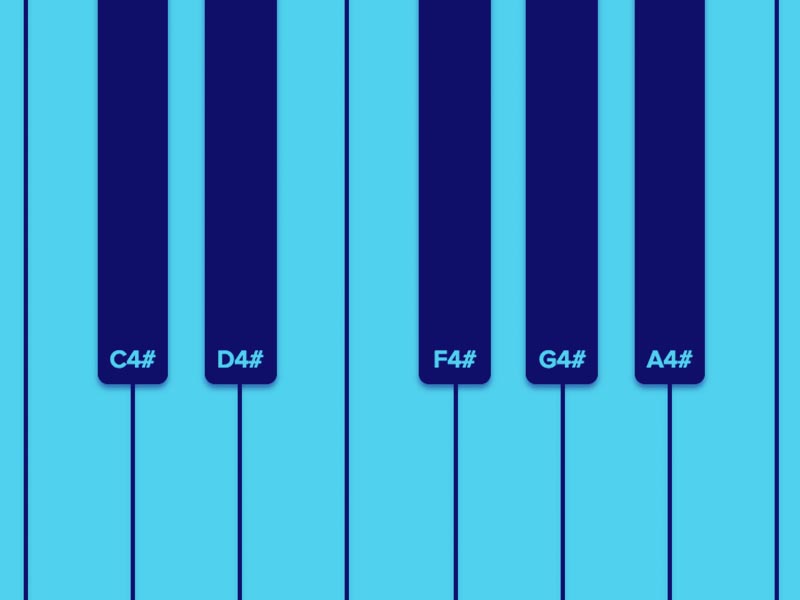

These results are reported in detail in the papers, comparing to other methods and previous state-of-the-art. This section illustrates these results in several animated and interactive demos: Demos 1.1, 1.2, and 3.1 illustrate the technology, with animations on how evolution discovers network topologies, modules, and node structures, and how those discoveries improve its performance. Another set illustrates the resulting performance: How does the AI perceive the characters you draw (Demo 1.3)? Which celebrity faces match best the attribute values you specify (Demo 2.1)? How do the evolved gated recurrent networks improvise on a musical melody you start (Demo 3.2)?

We hope you enjoy the papers and demos. If you’d like to get started on your own experiments on deep neuroevolution, we’ll provide a starting point software here soon. Other related software is provided e.g. by OpenAI, Uber.ai, UT Austin, and UCF, Enjoy!

Paper 1: Liang, J., Meyerson, E., and Miikkulainen, R. (2018). Evolutionary Architecture Search for Deep Multitask Networks. In Proceedings of the Genetic and Evolutionary Computation Conference (GECCO-2018, Kyoto, Japan).