Our research explores the transformative potential of decision AI: systems that go beyond predicting outcomes to prescribing real-world actions. Through scientific papers, open-source tools, AI for Good projects, and our advanced platforms, we aim to inspire collaboration and drive meaningful progress across the AI community.

WHAT'S NEW

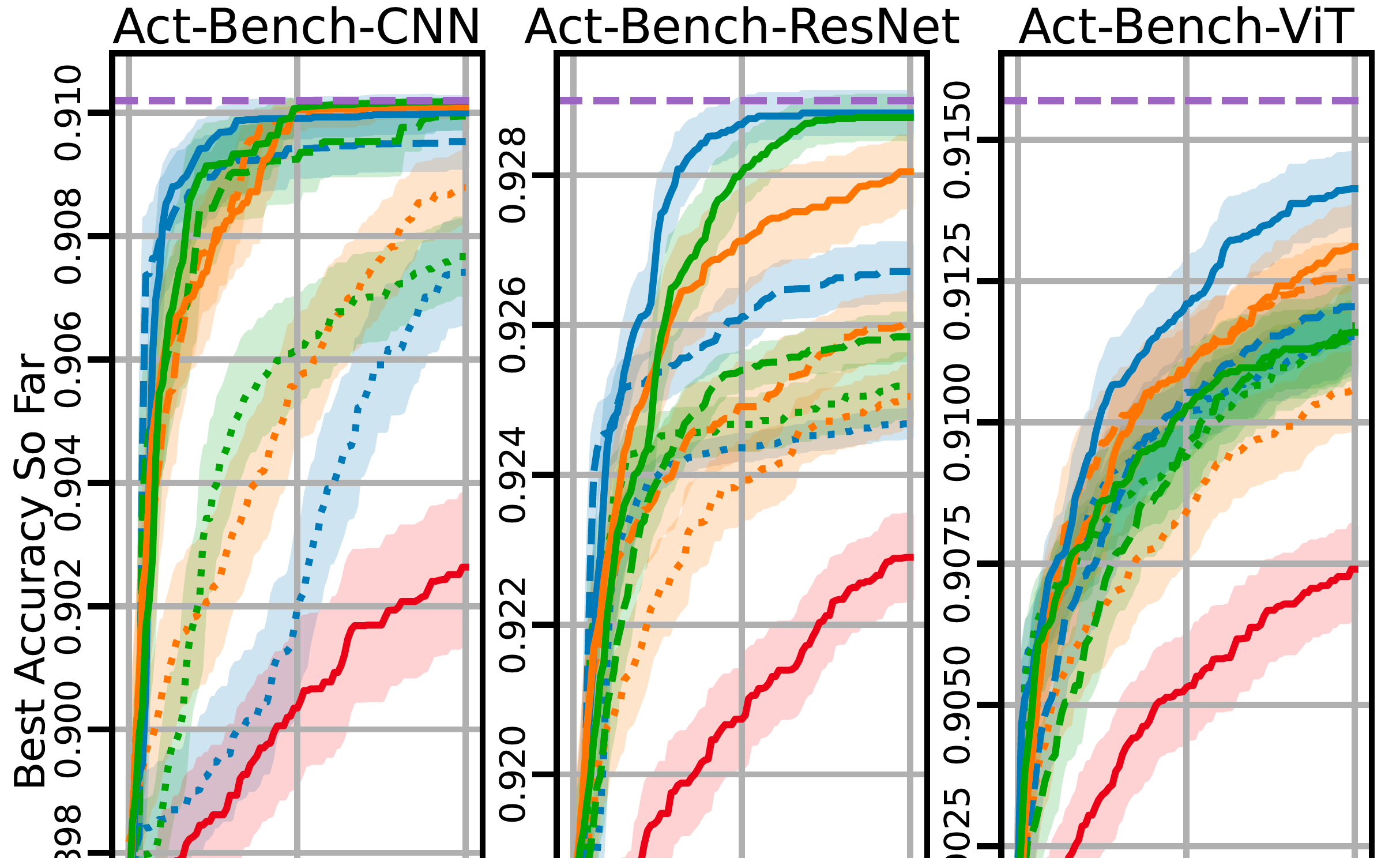

Explore our latest publications

We publish our research in academic papers, advancing AI technologies that improve decision-making and help solve complex global challenges.

See innovation in action through our demos

We collaborate with domain experts to apply our research to real-world problems. This work helps us develop better AI methods while maximizing human potential.

Our latest media coverage

Discover the latest media and press updates from our team on AI’s transformative role across various fields and applications.

Connect with us

Reach out to us at evolutionmlmail@gmail.com with inquiries or collaboration opportunities. Follow us on social media for research updates, AI insights, and new innovations from the decision AI team.