Introduction

Deep learning (DL) has transformed much of AI, and demonstrated how machine learning can make a difference in the real world. Its core technology is gradient descent, which has been used in neural networks since the 1980s. However, massive expansion of available training data and compute gave it a new instantiation that significantly increased its power.

Evolutionary computation (EC) is on the verge of a similar breakthrough. Importantly, however, EC addresses a different but equally far-reaching problem. While DL is focused on modeling what we already know, EC is focused on creating solutions that do not yet exist. That is, whereas DL makes it possible to recognize, for example, new instances of objects and speech within familiar categories, EC makes it possible to discover entirely new objects and behaviors: those that maximize a given objective. EC does so not by following a gradient (as most DL and reinforcement learning approaches do), but through massive exploration: it uses a population of candidates to search the space of solutions in parallel, emphasizing novel and surprising solutions. Thus, EC makes a host of new applications of AI possible: designing more effective and economical physical devices and software interfaces; discovering more effective and efficient behaviors for robots and virtual agents; and creating more effective and cheaper health interventions, growth recipes for agriculture, and mechanical and biological processes.
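To make the idea of population-based search concrete, here is a minimal sketch of a generic evolutionary loop. It is an illustration only, not the method of any paper on this site: the function names (`evolve`, `sphere`), the toy objective, and all parameter values are assumptions chosen for brevity.

```python
import random

def evolve(fitness, genome_length, population_size=50, generations=100,
           mutation_scale=0.1, elite_fraction=0.2, seed=0):
    """Toy elitist evolutionary search that maximizes `fitness` (no gradients)."""
    rng = random.Random(seed)
    # Start from a random population of real-valued genomes.
    population = [[rng.uniform(-1.0, 1.0) for _ in range(genome_length)]
                  for _ in range(population_size)]
    n_elite = max(1, int(elite_fraction * population_size))
    for _ in range(generations):
        # Rank candidates by the objective, best first, and keep the elite.
        population.sort(key=fitness, reverse=True)
        parents = population[:n_elite]
        # Refill the population with mutated copies of randomly chosen parents.
        children = []
        while len(parents) + len(children) < population_size:
            parent = rng.choice(parents)
            children.append([g + rng.gauss(0.0, mutation_scale) for g in parent])
        population = parents + children
    return max(population, key=fitness)

def sphere(genome):
    # Hypothetical objective: largest (zero) when every gene equals 0.5.
    return -sum((g - 0.5) ** 2 for g in genome)

best = evolve(sphere, genome_length=5)
```

The only feedback the search uses is the ranking of candidates by the objective, so the same loop applies when the objective is not differentiable.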

Like the basic ideas of neural networks, the basic ideas of EC have existed for decades. Once instantiated to take advantage of the increased data and compute, they stand to gain in much the same way DL did. Recent advances in novelty search, multiobjective optimization, and parallelization are indeed essential ingredients. Building on this momentum, this website introduces five new papers, and highlights four background papers, that scale up evolution further. The papers illustrate three different aspects of modern EC:

I. Neuroevolution: Improving Deep Learning with Evolutionary Computation

Much of the power of DL comes from the size and complexity of the networks. Their architecture, i.e. network topology, modules, and hyperparameters, can be optimized through evolution beyond human ability to do so. We demonstrate this idea by producing new state-of-the-art results in two multitask learning domains, i.e. Omniglot character recognition and CelebA face attribute recognition, as well as the standard sequence processing benchmark of language modeling. Interactive demos illustrate the tasks and the performance of the complex networks, and graphical visualizations help understand how evolution discovers these solutions.

II. Commercializing Evolutionary Computation

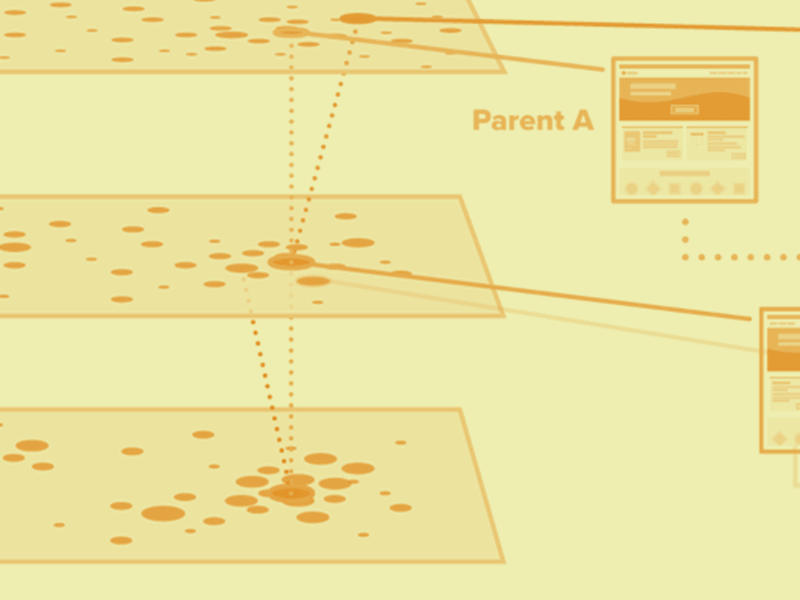

Evolutionary computation can be industrialized to discover new solutions routinely in real-world applications. We demonstrate this ability in Sentient Ascend, where evolution optimizes web interface designs to maximize conversions. This application requires solving an important scientific problem: how candidates can be evaluated reliably in uncertain environments. Graphical visualizations demonstrate how more reliable candidates emerge in evolution, how multi-armed bandit algorithms can be used to select the winner more reliably, and how performance can be maximized throughout the discovery process.
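As a rough illustration of how a bandit algorithm can pick a winner from noisy evaluations, the sketch below uses Thompson sampling with Beta posteriors over conversion rates. This is a generic textbook technique shown under stated assumptions, not the algorithm used in Sentient Ascend: the function name `thompson_select_winner`, the simulated conversion rates, and the visitor count are all hypothetical.

```python
import random

def thompson_select_winner(true_rates, n_visitors=5000, seed=0):
    """Simulate Thompson sampling over candidate designs with Bernoulli conversions.

    `true_rates` are hypothetical conversion rates used only to simulate visitors.
    Returns the index of the design with the highest posterior mean, plus pull counts.
    """
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(n_visitors):
        # Sample a plausible conversion rate for each design from its Beta posterior.
        samples = [rng.betavariate(1 + s, 1 + f)
                   for s, f in zip(successes, failures)]
        arm = max(range(len(samples)), key=samples.__getitem__)
        # Show the chosen design to one simulated visitor and record the outcome.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    posterior_means = [(1 + s) / (2 + s + f)
                       for s, f in zip(successes, failures)]
    winner = max(range(len(posterior_means)), key=posterior_means.__getitem__)
    pulls = [s + f for s, f in zip(successes, failures)]
    return winner, pulls

winner, pulls = thompson_select_winner([0.05, 0.10, 0.30])
```

Because traffic shifts toward designs that appear to convert well, most visitors see strong candidates even while the winner is still being identified.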

III. Solving Hard Problems with Evolutionary Computation

Much of the progress in EC has focused on challenging optimization problems that cannot be solved using traditional nonlinear optimization techniques. Most recent EC techniques are designed to take advantage of the large amount of computational power that is now available. The paper in this section introduces such a technique: using composite objectives to define a useful search space, and novelty selection to explore it effectively. Other (earlier) papers suggest how such evolutionary processes can be run on massively parallel compute. Demos illustrate how the techniques can discover good solutions in deceptive search spaces, matching state-of-the-art results on minimal sorting networks.
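One common way to favor novel candidates, sketched below, is to score each candidate by its mean distance to its nearest neighbors in some behavior space and keep the highest-scoring ones. This is the generic novelty measure from the novelty search literature, not necessarily the selection method of the paper described above; the names `novelty_scores` and `select_novel`, the behavior vectors, and the choice of `k` are assumptions for illustration.

```python
import math

def novelty_scores(behaviors, k=3):
    """Mean Euclidean distance from each behavior vector to its k nearest neighbors."""
    scores = []
    for i, behavior in enumerate(behaviors):
        distances = sorted(math.dist(behavior, other)
                           for j, other in enumerate(behaviors) if j != i)
        nearest = distances[:k]
        scores.append(sum(nearest) / len(nearest))
    return scores

def select_novel(candidates, behaviors, n_keep, k=3):
    """Keep the n_keep candidates whose behaviors are farthest from their neighbors."""
    scores = novelty_scores(behaviors, k)
    ranked = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
    return [candidates[i] for i in ranked[:n_keep]]

# Hypothetical behavior descriptors: three clustered candidates and one outlier.
behaviors = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0)]
kept = select_novel(["a", "b", "c", "d"], behaviors, n_keep=1, k=2)
```

Here the outlier `"d"` is kept because it lies far from the cluster, which is the kind of pressure that helps a search escape deceptive regions.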

The results in these papers contribute to the momentum that is building up around EC, including recent results by research groups at OpenAI, Uber.ai, DeepMind, Google, BEACON, etc. We invite you to join this exciting process, as a customer or user of the technology, or as a researcher or developer. To get started, check out Demo 1 below, which introduces the basic ideas of evolutionary computation. Next, explore the Neuroevolution, Commercialization, and Hard Problems sections on this site, including the ten visualization demos that illustrate the technology and the three interactive demos that let you engage with it. Then, perhaps try some experiments of your own, for example, using software by GMU, OpenAI, Uber.ai, UT Austin, UCF (and by Cognizant, to be announced soon).

Contributing Researchers

Babak Hodjat: Team Lead; PhD Kyushu University 2003

Risto Miikkulainen: Research Lead; Professor of Computer Science at UT Austin; PhD UCLA 1990

Hormoz Shahrzad: Senior Research Scientist

Xin Qiu: Senior Research Scientist; PhD National University of Singapore 2016

Jason Liang: Research Scientist; PhD UT Austin 2018

Elliot Meyerson: Research Scientist; PhD UT Austin 2018

Aditya Rawal: Research Scientist; PhD UT Austin 2018