This section lists various open-source software packages available from the Evolutionary AI team. These are available for research purposes only; for commercial use, contact info@evolution.ml.

Unless otherwise specified, the packages are provided "as-is", i.e., as concrete implementations of technologies described in our research papers.

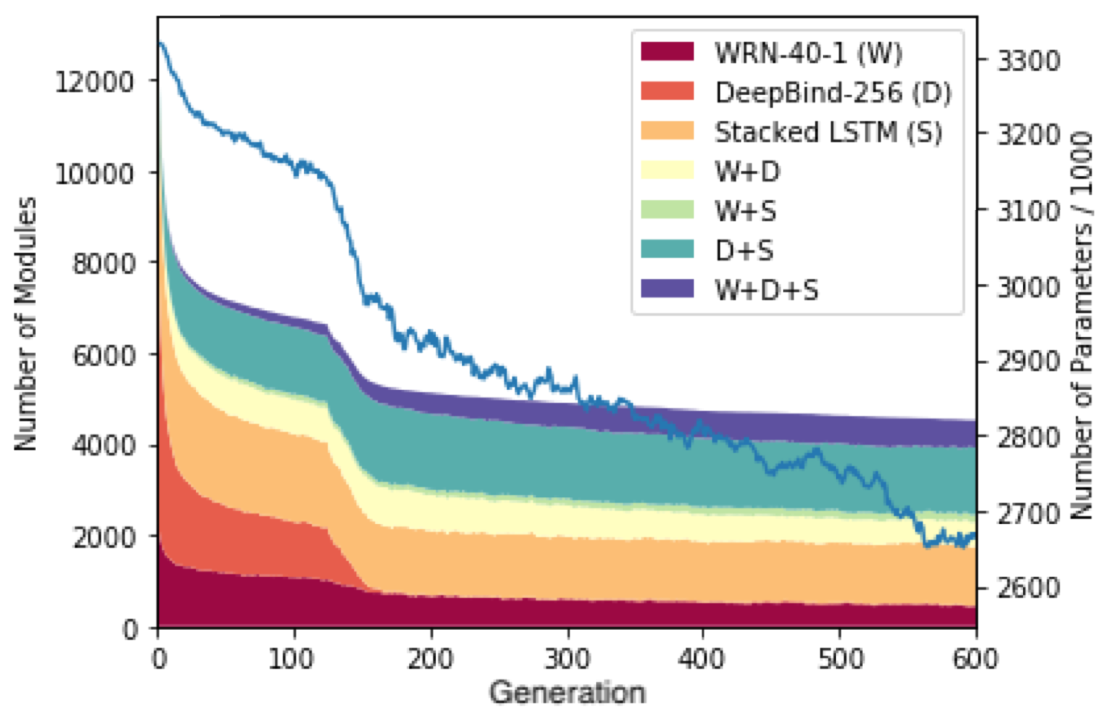

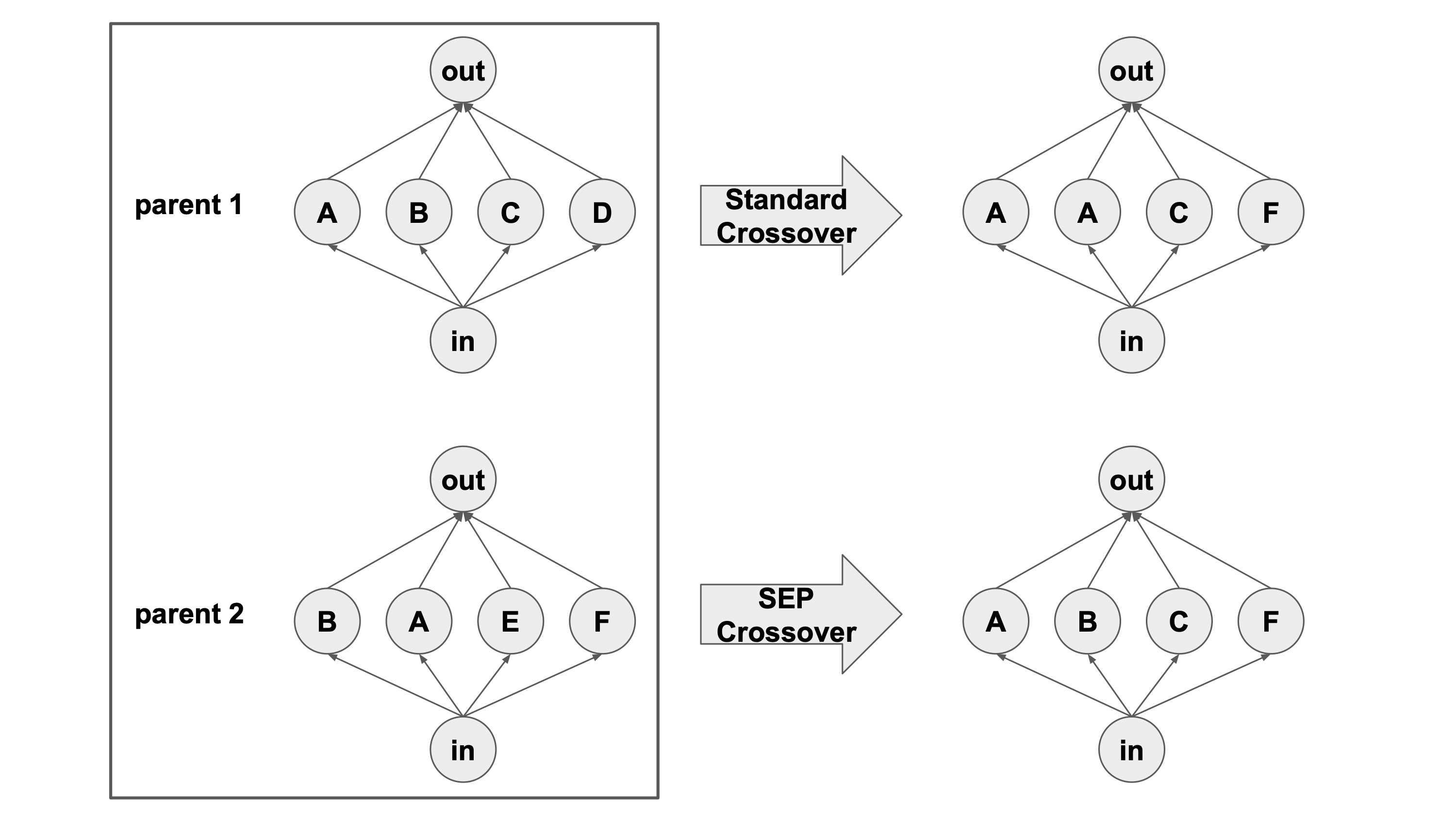

SEPX: solving the permutation problem in NAS (Code, Paper)

Shortest Edit Path Crossover (SEPX) directly recombines architectures in the original graph space, overcoming the permutation problem in traditional evolutionary neural architecture search (NAS). Its advantage over Reinforcement Learning (RL) and other methods is proved theoretically and verified empirically.
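The following is a minimal Python sketch of edit-path crossover, intended only to illustrate the idea; it is not the released SEPX code. The graph representation, the helper names (`edit_path`, `apply_edits`, `sepx_crossover`), the unit edit costs, and the choice of applying a random half of the edits are assumptions made for illustration. The sketch also assumes both parents have the same number of nodes and finds the node correspondence by brute force over all permutations, which is only feasible for small cells. The point it shows is that two parents encoding the same architecture with different node numbering have an empty edit path, so their child is not a scrambled mix of the two.

```python
import itertools
import random
from typing import FrozenSet, List, Tuple

Edge = Tuple[int, int]
Graph = Tuple[List[str], FrozenSet[Edge]]  # (operation per node, directed edges)
Edit = tuple  # ("relabel", i, op) | ("del_edge", u, v) | ("add_edge", u, v)


def edit_path(a: Graph, b: Graph) -> List[Edit]:
    """Shortest edit path turning a into (a renumbering of) b, in a's node indices.

    Assumption: unit cost per node relabel and per edge insertion/deletion.
    Assumption: both graphs have the same number of nodes. Every node
    correspondence is tried, so this only scales to small cells.
    """
    ops_a, edges_a = a
    ops_b, edges_b = b
    n = len(ops_a)
    assert len(ops_b) == n, "this sketch assumes equal-size graphs"
    best = None
    for p in itertools.permutations(range(n)):  # a-node i <-> b-node p[i]
        q = {p[i]: i for i in range(n)}  # inverse map: b-node -> a-node
        target = {(q[u], q[v]) for (u, v) in edges_b}  # b's edges in a's indices
        edits = [("relabel", i, ops_b[p[i]]) for i in range(n) if ops_a[i] != ops_b[p[i]]]
        edits += [("del_edge", u, v) for (u, v) in sorted(set(edges_a) - target)]
        edits += [("add_edge", u, v) for (u, v) in sorted(target - set(edges_a))]
        if best is None or len(edits) < len(best):
            best = edits
    return best


def apply_edits(a: Graph, edits: List[Edit]) -> Graph:
    """Apply any subset of edits to a; each edit touches a distinct node or edge."""
    ops, edges = list(a[0]), set(a[1])
    for kind, *args in edits:
        if kind == "relabel":
            i, op = args
            ops[i] = op
        elif kind == "del_edge":
            edges.discard(tuple(args))
        else:  # "add_edge"
            edges.add(tuple(args))
    return ops, frozenset(edges)


def sepx_crossover(a: Graph, b: Graph, rng: random.Random) -> Graph:
    """Child = parent a plus a random half of the edits on the shortest path to b.

    The child is not checked for validity (e.g. acyclicity) in this sketch.
    """
    path = edit_path(a, b)
    chosen = rng.sample(path, len(path) // 2)
    return apply_edits(a, chosen)


# Usage
a = (["in", "conv3", "pool", "out"], frozenset({(0, 1), (1, 2), (2, 3)}))
# Same architecture as a, with the two middle nodes numbered the other way round.
b = (["in", "pool", "conv3", "out"], frozenset({(0, 2), (2, 1), (1, 3)}))
print(edit_path(a, b))  # → []  (isomorphic parents: nothing to recombine)

d = (["in", "conv3", "conv3", "out"], frozenset({(0, 1), (1, 2), (2, 3), (0, 3)}))
print(edit_path(a, d))  # → [('relabel', 2, 'conv3'), ('add_edge', 0, 3)]
child = sepx_crossover(a, d, random.Random(0))  # applies one of the two edits
```

Because the child is built from edits taken from a shortest edit path, it lies on that path: in the second example it is exactly one edit away from each parent. A naive position-by-position crossover of `a` and `b` would instead treat their differently numbered middle nodes as eight separate differences, which is the permutation problem the paper addresses.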

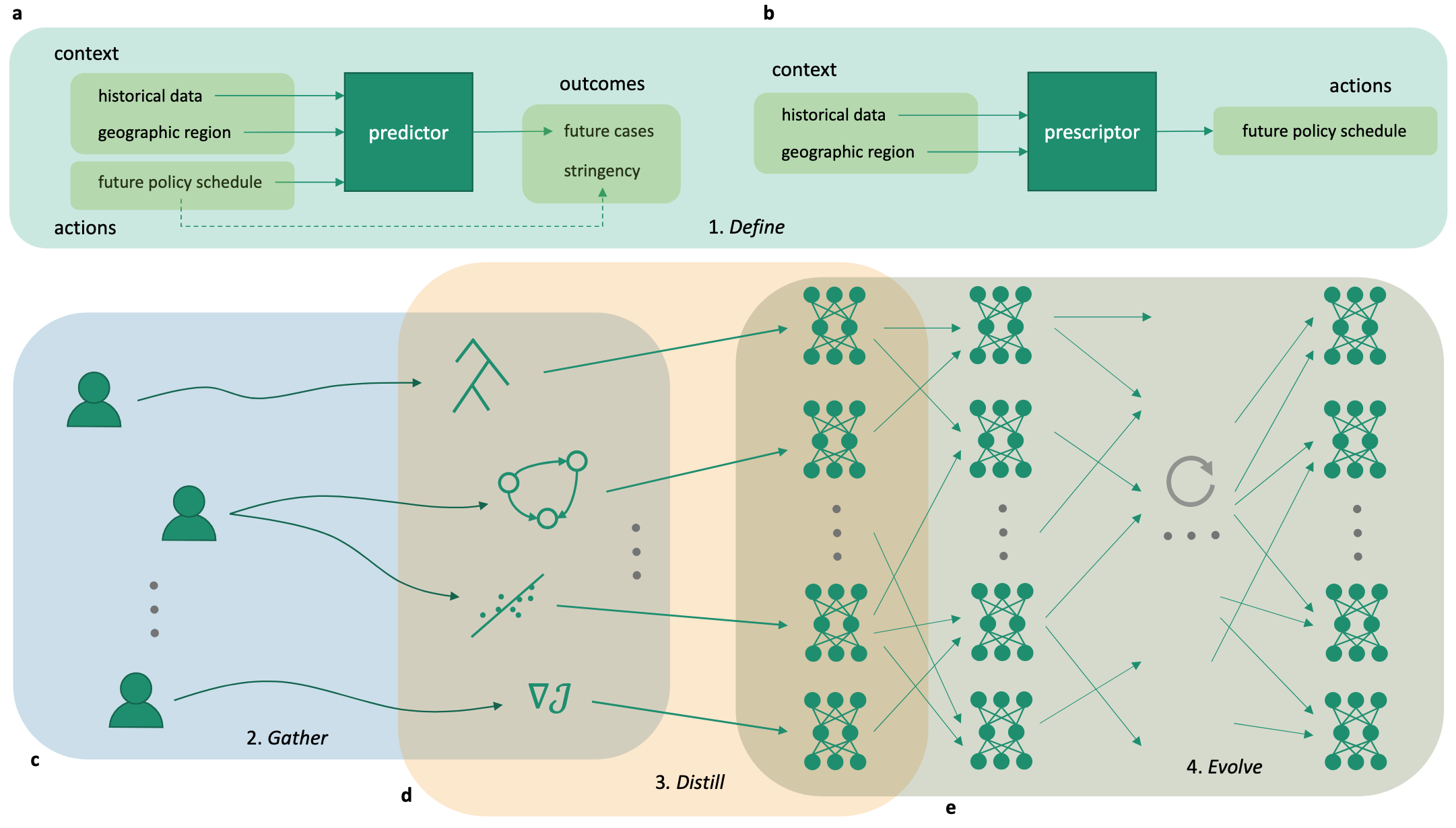

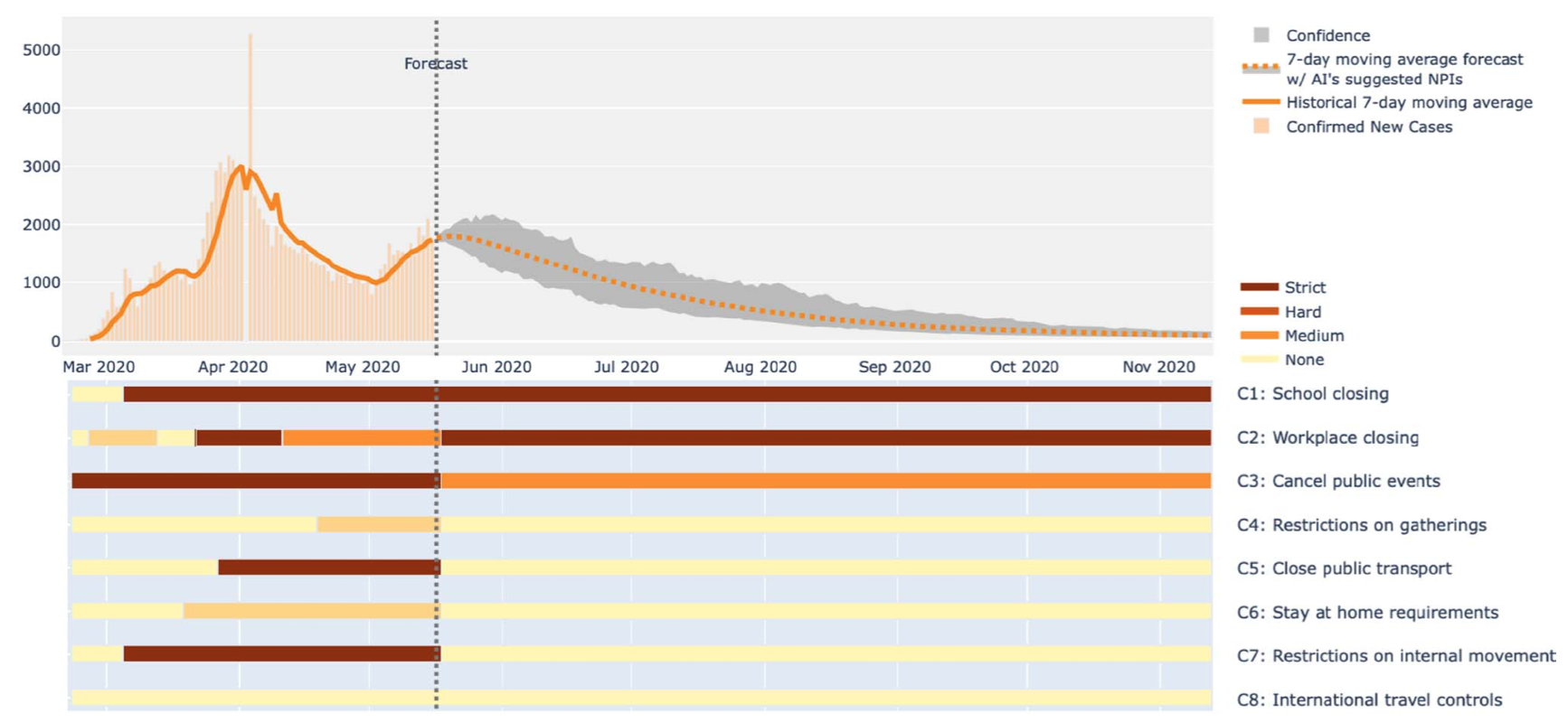

XPRIZE Pandemic Response Challenge (Code, Paper)

In this competition, predictors were developed to forecast the number of COVID-19 cases, and prescriptors to recommend non-pharmaceutical interventions for coping with the pandemic. The package includes sample predictors and prescriptors and their evaluation code (see the competition tech page for details).